Davide Bevilacqua, who headed this year's AMRO24¹ festival, introduced me to Christoph Döberl last winter. He suggested that our shared interest in generative AI could be fertile ground for discussion and that we might collaborate on a workshop at AMRO.

In our respective businesses, Chris and I have both been building software involving large language models (LLMs).² We are both into tech and tech history, and the conversation we have carried on over the past months has been very illuminating for both of us. Despite our tight schedules, we shared a desire to examine the broader picture together with other tech-critical people like ourselves, and so we decided to seize the opportunity to do this at AMRO. Our goal, insofar as we had one, was to collect perspectives and gauge the understanding of a group we assumed would be primed for the subject matter and could plausibly weigh in on how AI fits into the current state of the broader internet. In other words: we were seeing crazy things happening, and wanted to know if others were seeing them too.

"The Dead Internet Theory", the inspiration for the title of our workshop, was originally the name of a conspiracy theory cooked up on forums like 4chan (possibly 4chan itself). The gist of the theory was that while browsing the web, you never encounter humans, only bots. At the time of its coinage in the early part of the 2010s, it was fair to call this theory a paranoid fantasy. However, given the recent advances in LLMs and the ensuing rise in automated garbage content, many critics are now revisiting it, since its central premise appears to have gained new relevance. We hoped that by applying this lens to the broad range of subjects we wanted to tackle, we could somehow keep the discussion on the rails: a gamble, we knew. It speaks to the quality of the people AMRO attracts that we were able to pull this off.

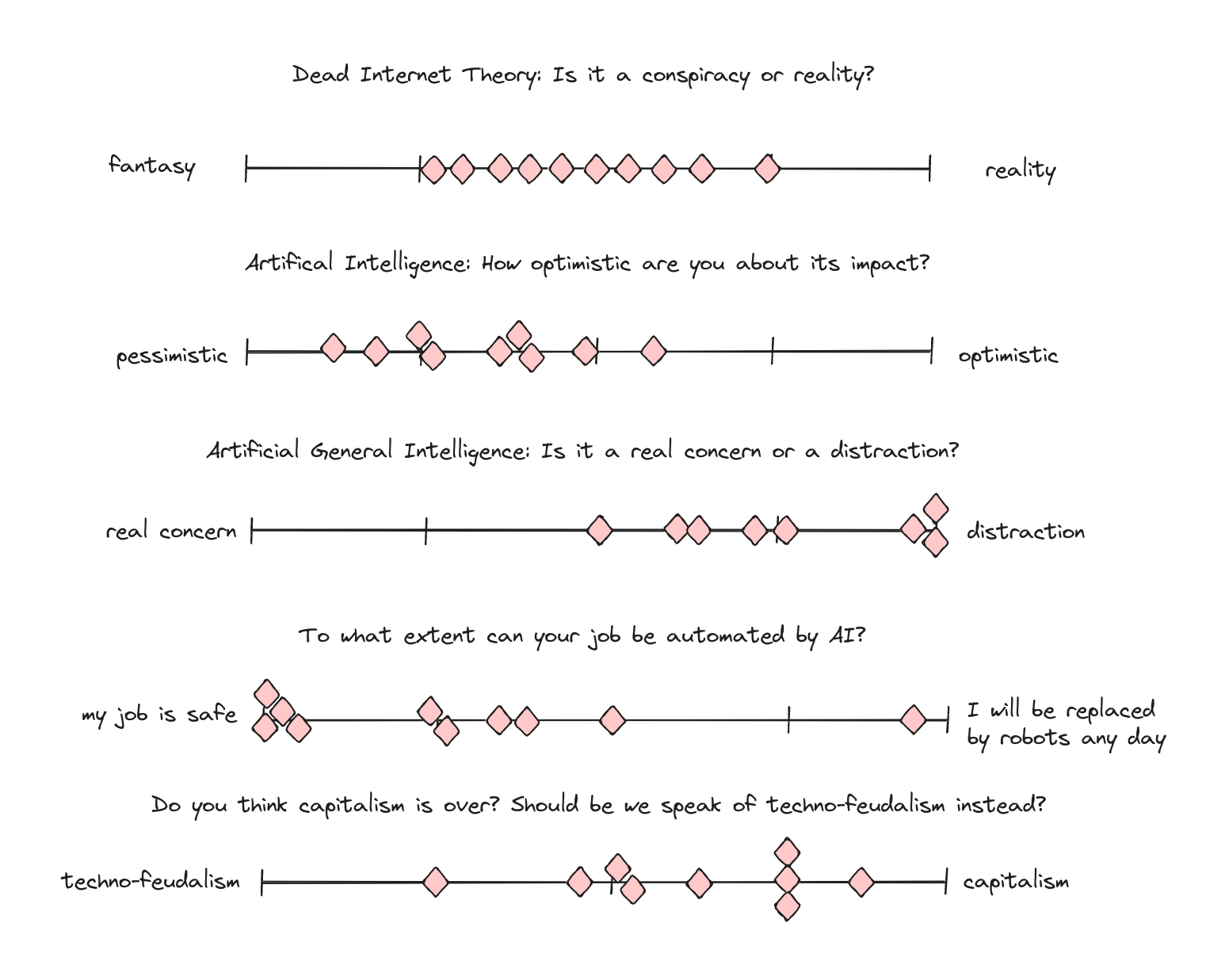

During the first hour, we contextualized the subject and got acquainted with the 13 participants. We posed broad questions and asked everyone to position themselves in the room to indicate where they stood (see image). Except on the first question, whether the Dead Internet is real or a fantasy, the group leaned strongly in one direction or the other, yet participants were still dispersed enough to promise an engaging discussion. What stood out most to me was that participants in creative professions, in particular, saw their work as being at greater risk of automation.

Next, we presented the group with a range of examples, articles, and anecdotes that we had compiled over the past few months on an Excalidraw³ whiteboard. This collage included discussions about the inherent limitations of LLMs,⁴ examples of users abandoning search engines and forums in favor of ChatGPT,⁵ recent developments in bot traffic on Twitter,⁶ tools for protecting art from being used as training data,⁷ the enshittification of science,⁸ the collapse of online application systems,⁹ generated disinformation,¹⁰ gaffes,¹¹ Shrimp Jesus,¹² and more. Before asking participants to take some time to explore these examples, I gave another short primer.

First, I recalled an article written by a teacher,¹³ in which he expressed his horror at the subtle ways LLMs subvert the basic notion of meaning, framing his discussion as an extension of Walter Benjamin's famous essay "The Work of Art in the Age of Mechanical Reproduction". Then I shared the story of a leaked slide from an internal Spotify presentation showing how the company's ambition of enclosing and occupying the podcast space was thwarted by the simple fact of how podcasts have historically been distributed: via an early web standard called RSS (Really Simple Syndication). Unlike videos on platforms such as YouTube, where users are forced to surrender their data to access the content, podcasts can be served and fetched from anywhere on the web, so no such trade-off can be enforced.

After giving participants about 20 minutes to explore the resources Chris and I had collected, we reconvened to gather their reactions, asking in particular about any fears or hopes their exploration had inspired.

Few participants had been aware of how widespread the flood of generated content on the major platforms had already become, and many were alarmed by it. Picking up on an article¹⁴ describing how Twitter's AI bot "Grok" had invented a fake story about a bombing in Tel Aviv, the photographer Ozan expressed concern about reality splintering further: "It's already difficult to know what's really happening. How can we ever know?" Brendan, an artist who had worked with earlier iterations of NLP¹⁵ (natural language processing) software and is wary of the hype surrounding AI, brought up Usenet, a distributed discussion network predating the World Wide Web, and how it had collapsed due to spam.

Nicolas, a filmmaker who had earlier expressed concern about AI affecting his job, was especially intrigued by Nightshade,¹⁶ a tool that allows artists to apply alterations to images that are invisible to humans but would damage any image-generating model trained on them. "Assuming it works", such a tool could be used to enforce artists' consent.

Selena, a university professor researching the Protohistory of AI, pointed out that none of the examples we provided described issues that hadn't already existed in some form or other. She expected users to broadly adapt by improving their AI and spam literacy, while also recognizing that the current state of a platform like Facebook is indicative of an internet that isn't dead, but rather a zombie. This latter observation referred to an article¹⁷ describing how Facebook users, typically older ones, are increasingly interacting with generated content, bots, and user accounts taken over by bots.

Overall, there was a shared understanding that it wasn't the "internet" per se that was "dying", but rather the major platforms that we too often conflate with the internet itself (in certain regions, for lack of alternatives). Moreover, these platforms currently have no incentive to moderate generated content. One participant expressed her hope that the degradation of these platforms would lead to a renaissance of older decentralized technologies like RSS or IRC, as normies seek alternatives.

While participants gave their reactions, we noted on cards the topics discussed and the fears and hopes expressed. To our surprise, we wound up with more hopes than fears. We then asked participants to choose from among these topics for discussion in smaller groups. Two groups formed: one to discuss the possibility of class differences manifesting as "generated content for the poor, human-made content for the rich", and another to discuss the topic of "model poisoning as resistance".

When the two groups reconvened, we asked them to share insights from their discussions. The topic of class inequality was inspired by an article¹⁸ about a new trend on Amazon of generated books appearing in the orbit of new releases or popular searches, and an accompanying trend on YouTube, where tutorials on using generative AI to create books (children's books in particular) with no effort and potentially huge returns receive millions of clicks. The author worried that low-quality generated books might land in front of children whose parents have too little time to vet them, or that public libraries might buy such books in bulk because they are cheap, and about the developmental side effects this could have on those children.

The group recognized a heightened need for consumer reorientation, and identified scale and accountability as two important factors in understanding the problem and its potential solutions. Moderation is essential for distinguishing human-made from AI-generated content. At the scale of a platform like Amazon, moderation has always been an issue, but AI-accelerated enshittification (the gradual degradation of a platform) is pushing it to new levels of impossibility. Mastodon (a decentralized, Twitter-like social network) was named as an example of moderation at scale that relies on networks of trust rather than filters of distrust (whitelists vs. blacklists).

The second group, discussing "model poisoning as resistance", was very sympathetic to Nightshade and its punk-like approach. However, the group felt that the problem of work being stolen and used as training data required more than a techno-solutionist response; rather, the tool might serve to initiate a political discussion. The group drew a parallel to how record labels pushed for DRM (digital rights management) in the early 2000s, recognizing hypocrisy in how unevenly copyright is being applied.

In a somewhat condensed session at the end of our three-hour workshop, participants gave short statements about their takeaways. Some minds were changed: the student and software developer who had initially been most inclined to give credence to the notion that AGI (Artificial General Intelligence) was a real concern now firmly called it a distraction. Many said they had learned something new; several expressed shock at how far-reaching these issues have become and said they were leaving with more questions than they had arrived with. One consensus that seemed to hold among artists, professors, technicians, and students alike was that these issues require political and social, not technical, solutions.

As we concluded, the room was abuzz with discussion. It felt like we had struck a chord. For my part, I'd like to express my deepest gratitude to Christoph for seeing this workshop through with me, and to all the unbelievably engaged participants who brought so, so much to the table. I hope you all feel inspired to carry on this conversation so that the theme of AMRO26 might be "Dancing on the Graves of the Dead Platforms".